Introduction

This post documents the process of optimizing a small problem – generating images of the Mandelbrot Set. Although I will show a speedup of ~8x over the naive implementation (there is a part 2 that will bring this to ~100x in some cases), this is mostly meant as an example of how I go about using my knowledge of software design and computer architecture to optimize software. This post is rather long, but many of the sections are independent of each other, so feel free to use the Contents to go to the sections of interest. In particular I spend some time setting up the problem, and the real optimizing starts here.

Contents

- Introduction

- Contents

- General notes

- Setting up the problem

- Parameters of problem

- First do the simple version

- Set up some tests

- Whether to optimize

- Are there any compiler flags that will help?

- Look at the assembler

- Sheep race optimization

- Interleaving

- Interleaving, take two

- Vectorizing

General notes

- This is not a cookbook – there is no cookbook for this. Optimization is an exploration, not a recipe, it is often more of an art than a science.

- While many techniques might be able to be applied to different problems, each problems will have its own, often unique, set of techniques that can be usefully applied.

- Measure, measure, measure to know whether a change is an improvement, and profile to know where to improve. Just because something looks like it might improve performance doesn’t mean that it does – I’ve had many years of practice and I still sometimes go down blind alleys.

- Optimization is never finished (except maybe for tiny problems, or where a solution is totally memory or I/O bound). Generally what happens is that diminishing returns kick in, in that it starts taking more and more effort to get smaller and smaller gains. Sometimes trying a whole different path might yield better results.

- This is not an academic paper – there has been no literature review, no peer review, there is no bibliography, and I’m not claiming that any of these ideas are novel.

- Although I use C++ for the examples, the techniques I use would work in any compiled language or assembler – C++, C, assembler, Fortran, Rust, Go, Java (if using a JIT), although vectorizing requires access to the intrinsics or a good vectorizing compiler. They would not offer anything useful in interpreted languages such as Python or Javascript.

- There is a repository with the code used for this here. The code shown here will be different as it has been simplified somewhat for this post.

Setting up the problem

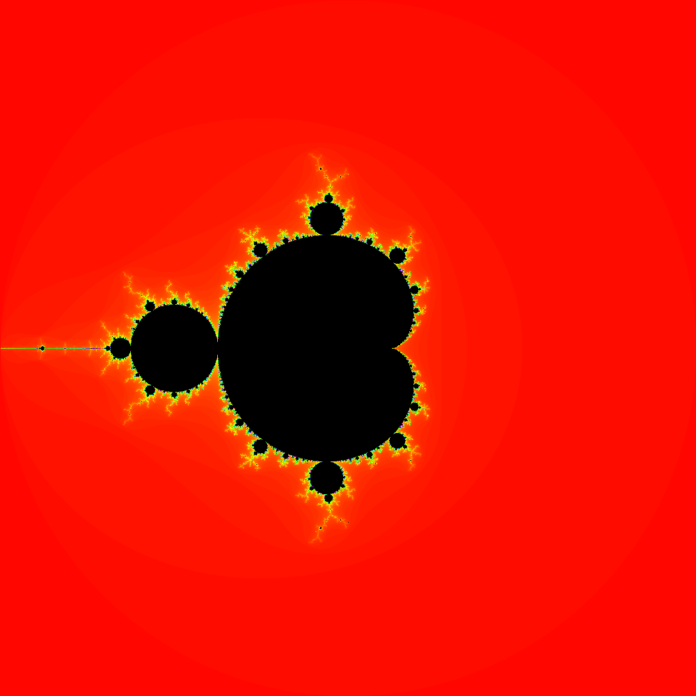

In order to demonstrate the thought processes that go into optimizing code, it is important to firstly to have a well defined problem to solve. The example chosen here is a Mandelbrot Set generator. This has the advantages of being well known and understood, suitable for optimization, small enough to explore the problem in a reasonable amount of time, and generates some pretty images. It has the disadvantage that there aren’t a lot algorithmic improvements that can be made, and is too small a problem to demonstrate any interesting data structures.

Definition of the Mandelbrot Set

Given a point (c) in the complex plane, it belongs in the Mandelbrot set if the recurrence relation (z_{n+1}=z_n+c, z_0=c) does not diverge. It can be demonstrated that not diverging is equivalent to testing (|z|leq 4) for all (n geq 0).

Unpacking this a little,

Because we don’t have a way of iterating an infinite number of times, let’s pick some maximum number of iterations (n_{max}).

For a point ((c_x,c_y)), start with (x=0, y=0) (this is equivalent to starting with (x=c_x, y=c_y), it will just require one extra iteration) and apply

(x_{n+1}={x_n}^2-{y_n}^2+c_x)

(y_{n+1}=2{x_n}{y_n}+c_y)

until (x_n^2+y_n^2>4) or (n>=n_{max}).

If (n_{max}) is reached, we say that a point is in the Mandelbrot Set, otherwise it is not. The higher the limit (n_{max}), the less the false positives.

It is customary when displaying this in an image to set the points in the Mandelbrot set to be black, and use a gradient of colors to represent how many iterations (n) it took to reach (x_n^2+y_n^2>4). It ends up looking like this for (-2 leq x,y < 2):

Parameters of problem

There are still some things I need to nail down. Firstly, what sort of hardware am I going to run this on? In particular, am I going to use a CPU, or a GPU with something like OpenCL or CUDA? In practice, this problem is embarrassingly parallel (yes, that is a technical term), which makes it highly suited to a GPU, but this post is about CPU optimization. In particular, I am going to use x86-64, with the assumption AVX2 is available. Some notes will be included for how this would transfer to other processors, such as ARM, POWER or RISC-V. The particular CPU I used is a Intel(R) Core(TM) i5-4340M CPU @ 2.90GHz (Haswell).

Numeric representation

There are two sorts of numbers that that need to be processed and/or stored: the point coordinates (x),(y), and the iteration count.

Representation of (x),(y)

What sort of numerical representation should be used for (x) and (y)? Options include 32 bit float, 64 bit double, 32, 64, 128 (or larger) fixed point numbers stored in integers. The higher the precision, the deeper it is possible to zoom into the set before rounding errors distort the images and make them grainy.

If fixed point integers are used, note that all numbers are in the range (-16 < x < 16), so 5 bits are needed for the integer part, the rest can be used for the fractional part.

| Type | Precision (bits) | Storage (bits) | Notes |

|---|---|---|---|

| float | ~24 | 32 | Precision depends on distance from 0 |

| double | ~53 | 64 | Precision depends on distance from 0 |

| 32 bit fixed | 27 | 32 | |

| 64 bit fixed | 59 | 64 | Limited AVX2 support |

| 128 bit fixed | 123 | 128 | No direct CPU support |

Note that the storage size isn’t going to matter until it becomes time to vectorize this – at which point the smaller sizes mean that more can be packed into a vector, which will dramatically help with speed.

I start by ruling out float and 32 fixed point – they don’t allow particularly high levels of zoom, and low zoom levels don’t require many levels of iteration, so a naive solution will already be very fast.

I also ruled out 128 bit integers or higher, as there is no direct CPU support for this, and the problem becomes that of dealing with high speed multiprecision arithmetic, which is an interesting problem, but takes me away from considering a variety of optimizations.

64 bit integers would look to be a better choice than doubles because of slightly higher precision, and is certainly my preference (I prefer to use fixed point to floating point where feasible as then I have much better control over error propagation), but there is a wrinkle: I am going to want to vectorize this, and x86-64 AVX2 does not directly support any way of multiplying vectors of 64 bit integers and getting the high part, which would make the problem expensive to vectorize (the same is true of ARM NEON), which would bring me back to dealing with multiprecision arithmetic to do multiplications.

For the rest of this discussion, I will use double. In practice this seems to limit the workable zoom level to about (2^{33} times image_width) which for 1000×1000 images means a maximum zoom of 8589934592000.

Representation of the number of iterations

Another thing to determine the size of the elements of the array that iteration counts are stored in – the two options are 16 bit integers and 32 bit integers. Smaller means less memory, but a smaller maximum number of iterations. If the problem was memory bound on access to this array this choice might be important for performance, but there is so much numerical processing going on that it’s not going to matter here. I punted on this decision by defining a type iterations_t that can be changed later, and set it to be a 32 bit unsigned integer for now.

typedef uint32_t iterations_t;

First do the simple version

The first step is to write a simple version. Starting with this has several advantages:

- It is a check that the problem is well understood,

- It can be used to provide a dataset for testing more complicated versions,

- I consider it good practice to leave a commented copy in our final code (or internal documentation or somewhere else accessible) so that the algorithm is clear when doing maintenance,

- It might be fast enough, in which case optimization is not even required.

Here is a naive version of a Mandelbrot set generator written in C++:

iterations_t mandelbrot_point(double cx, double cy, iterations_t m) {

iterations_t count = 0;

double x = 0, y = 0;

while(count < m && x * x + y * y <= 4.0) {

double nx = x * x - y * y + cx;

y = 2.0 * x * y + cy;

x = nx;

count++;

}

return count;

}

void mandelbrot_render_simple(iterations_t* p, double cx, double cy,

double zoom, int width, int height, iterations_t iterations) {

double xs = cx - 0.5 / zoom;

double ys = cy + 0.5 * height / (zoom * width);

double inc = 1.0 / (zoom * width);

for(int j = 0; j < height ; j++) {

iterations_t* py = p + j * width;

double y = ys - inc * j;

for(int i = 0; i < width; i++) {

py[i] = mandelbrot_point(xs + inc * i, y, iterations);

}

}

}

A note about mandelbrot_render_simple: It would be faster to do the following for the inner loop:

double x = xs;

for(int i = 0; i < width; i++) {

py[i] = mandelbrot_point(x, y, iterations);

x += inc;

}

This replaces xs + inc * i (an integer to double conversion, a multiply and an addition) by x += inc (addition), but if inc is much smaller than x, rounding errors will accumulate rapidly. This is an example of how slippery it can be to deal with floating point arithmetic - What Every Computer Scientist Should Know About Floating-Point Arithmetic by David Goldberg (ACM Computing Surveys 23 issue 1, 1991) should be required reading for anyone dealing with floating point in a non-trivial way. Also, the time spent in mandelbrot_render_simple is tiny compared to the time spent in mandelbrot_point, so there is very little benefit to this in any case.

Set up some tests

It is important to have some idea that our initial code works, and to set up some tests to measure performance with.

I picked four areas of the Mandelbrot set, zoom set at the effective maximum of 8589934592000 and max iterations of 50000, to use in testing, the first three (A,B,C) to represent the sort of workloads that might typically be found zooming in, and the fourth (D) to represent the slowest possible example.

| test | (c_x) | (c_y) | black | detail |

|---|---|---|---|---|

| A | -0.57245092932760 | 0.563219321276942 | lots | lots |

| B | -0.57245092932763 | 0.563219321276842 | little | lots |

| C | -0.57245092932663 | 0.563219321276852 | little | little |

| D | 0 | 0 | all | none |

Test A

Test B

Test C

Test D

For these tests, I used clang++-13, with compiler flags

-Werror -Wall -Wextra -std=c++20 -mavx2 -mfma -mtune=haswell

Tests are run on an Intel(R) Core(TM) i5-4340M CPU @ 2.90GHz with 16GB of RAM, on Debian Linux with KDE and most applications shut down. I ran each case 20 times, which is enough to give a rough speed comparison.

Initial results:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| time (seconds) | 973.4 | 68.7 | 41.2 | 3550.7 |

| total iterations | 273760647440 | 19289413880 | 11543441620 | 1000000000000 |

Whether to optimize

I’ve done a lot of tramping in New Zealand (tramping is the word we use for hiking and backpacking), and many tracks have rivers to cross without the benefit of bridges. Part of the training for how to do this safely is to first ask yourselves (plural, because you are not doing this on your own, right?) the question “Do we cross?”, the point being that you never need to cross a river. Only after that do the other questions get considered: “Where do we cross?”, “How do we cross?”, etc.

Optimization is similar, that the first question should always be “Do I optimize?”. Clearly the advantages of optimization are that code may race faster, but there are reasons not to:

- The code might already be fast enough,

- The code might not be the bottleneck, so optimizing it will have little effect until the bottlenecks are dealt with,

- It takes a lot of engineering time to do well,

- Optimized code is generally much harder to maintain.

For the purposes of this discussion, I decide to go ahead with optimization.

Are there any compiler flags that will help?

It is good to get the compiler to do as much of the work as possible, so with that in mind, are there any optimization flags that might help that aren’t already covered by -O3?

One possibilty is -ffast-math for the mandelbrot_point function only. This is a flag to really be avoided unless you are sure of what you are doing, but this particular function is well behaved, there are no NaNs, no divisions or square roots, I don’t care about order of operations or associativity, and that loop by its nature is propagating rounding errors anyway, so -ffast-math may result in it just propagating different ones. In the next section, I am going to look at the assembler produced by the compiler, which is an extra check that ffast-math is safe to use.

Results with -ffast-math

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| time (seconds) | 932.5 | 65.9 | 39.5 | 3404.8 |

| total iterations | 273729279660 | 19298214380 | 11543330380 | 1000000000000 |

This is a small improvement. Note that the total number of iterations has changed slightly, reflecting the different rounding in the calculation, but these changes are tiny, and could be avoided by specifying the order of operations in the problem, or by using fixed point.

Look at the assembler

Decades ago, when CPU architectures were much simpler and compilers were not as good, it would sometimes be worth using assembler for tight inner loops. On some of the more modern CPUs, particularly the x86 family with “out of order execution”, “μops” and “ports”, the machine code instructions are converted into something that bears little resemblance to the original before being run. The compilers have been written to output code that gives very good performance, and are very hard to beat. I’m not saying it is impossible to do better - a person will have a better understanding of the problem being solved and may be able to take shortcuts that the compiler can’t, and a person can do at least as well as the compiler by using the compiler output as a starting point, but this would only be worth the effort in extreme cases, and would require a lot of trial and error and testing for possibly small gains, and even then there is the risk that something that works great on one processor performs badly on another processor in the same family. It is also much harder to maintain. I chose not to go that route for this problem, and let a compiler do that level of lifting.

On the other hand, it is important to be aware at some level of what is going on, both at the assembly and architecture level. I can’t recommend Agner Fog’s Software Optimization Resources highly enough for the x86 family of microprocessors. If there are equivalent resources for ARM (I know this is unlikely, as there are a lot more variety in ARM chips), I would be delighted to find out where they are.

For a tight loop it is very much worth looking at the assembler produced by the compiler (particularly after using -ffast-math to check that hasn’t had unexpected consequences). This can be done either with the -S flag with the compiler (gcc or clang, other compilers will have equivalents) or use the Compiler Explorer. The compiler explorer also has the advantages of showing how different compilers handle the same code.

Doing this with mandelbrot_point with -ffast-math, the result is (just showing the inner loop, and I’ve line numbered and annotated it):

0 .LBB0_3

1 vmulsd %xmm4, %xmm4, %xmm6 x_squared = x * x

2 vmulsd %xmm2, %xmm2, %xmm5 y_squared = y * y

3 vaddsd %xmm6, %xmm5, %xmm7 mag_squared=x_squared + y_squared

4 vucomisd %xmm3, %xmm7 branch if mag_squared > 4.0

5 ja .LBB0_6

6 vaddsd %xmm0, %xmm6, %xmm6 x_squared_plus_cx = x_squared + cx

7 vaddsd %xmm4, %xmm4, %xmm4 x_times_2 = x + x

8 vsubsd %xmm5, %xmm6, %xmm5 x_next = x_squared_plus_cx - y_squared

9 vfmadd213sd %xmm1, %xmm4, %xmm2 y = x_times_2 * y + cy

10 incl %eax count++

11 vmovapd %xmm5, %xmm4 x =x _next

12 cmpl %eax, %edi branch if count != m

13 jne .LBB0_3

This looks really efficient, and there a doesn’t seem to be anything bad introduced by -ffast-math - mostly it just allowed a multiply and an add to be bundled into one vfmadd213sd instruction, and flexibility about the associativity of 2*x*y.

Sheep race optimization

Anyone that has spent any time around sheep will know that they generally try to cluster together and move away from people. Say that you have a race of sheep that you want to move forwards.

The obvious thing to try (option a in the diagram) is to stand at the back in the hope that they will all move away from you. That doesn’t work, as the back sheep are blocked from moving by the sheep in front of them (they may bunch up a little), and the front sheep don’t want to move as you are too far away to be a threat, and they don’t want to separate from the rest of the sheep behind them.

What does work (option b in the diagram) is to start in front of all the sheep and walk backwards along the race. As you walk past the front sheep, they will move forwards to get away from you, followed by the next, then the next, until you are at the back and they are all moving forwards.

This concept of moving backwards to unstick a pipeline motivates the next optimization - reversing the order of instructions to stretch the distance between dependent instructions, so that the pipeline stays full.

Looking at a portion of the Instruction tables: Lists of instruction latencies, throughputs and micro-operation breakdowns for Intel, AMD and VIA CPUs for Haswell (other generations are similar enough that the same reasoning still applies):

| Instruction | Ports | Latency | Reciprocal Throughput |

|---|---|---|---|

| vaddsd/vsubsd | p1 | 3 | 1 |

| vucomisd | p1 | 3 | 1 |

| vmulsd | p01 | 5 | 0.5 |

| vfmadd213sd | p01 | 5 | 0.5 |

and looking at the assembly above, there are a lot of stalls going on, for instance %xmm5 is generated on line 1, but won’t be ready for line 2 until 5 clock cycles later, and %xmm4 is generated on line 7 and used on line 9. The processor internally will be able to mitigate this a little by out of order execution.

The loop structure (using <- to denote “depends on”) is something like:

while(D) {

A <- B

B <- A

C <- A,B

D <- C

}

where A and B represent the calculation of the next x and y, C and D represent the calculation and comparison of mag_squared to 4.

If calculations of C and D are reordered so that the gap between them and what they depend on is as long as possible, it changes to

while(D) {

D <- C

C <- A,B

A <- B

B <- A

}

D now has nearly a full loop between being calculated and being needed (although will be one loop behind), as does C.

I call this optimization a ‘sheep race’ - there is almost certainly a more common name for that, and I’d welcome being told what it is.

Turning this into code (incidentally, count has been changed to decrease rather than increase - this results in no change in performance, but will help later):

iterations_t mandelbrot_sheeprace(double cx, double cy, iterations_t m) {

double x = 0, y = 0, x_squared = 0, y_squared = 0, mag_squared = 0;

iterations_t count = m + 2;

while(count != 0 && mag_squared <= 4.0) {

mag_squared = x_squared + y_squared;

y_squared = y * y;

x_squared = x * x;

double newx = x_squared - y_squared + cx;

y = 2 * y * x + cy;

x = newx;

count--;

}

return m - count;

}

This now has the comparison of the mag_squared to 4 occur before the calculation of the next value of mag_squared, and the calculation of of mag_squared to occur before the calculation of the next values of x_squared and y_squared. As a result, the value used into comparison is now two loops behind, and count has to be adjusted accordingly (hence the m + 2). The extra two x,y values generated are harmless, and now the dependencies are further apart.

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| time (seconds) | 674 | 47.7 | 28.7 | 2462.3 |

| total iterations | 273743913640 | 19294157420 | 11543469820 | 1000000000000 |

This is a substantial improvement, and the only cost is that the code is less obvious.

Interleaving

Another thing to do to fill in the pipeline bubbles is to do the calculations for two points at the same time. I will call each calculation a stream, so there are two streams, (s_1) and (s_2). One loop handles the case of both streams (s_1) and (s_2) running, and the other loop handles the case where one of the streams has finished and only (s_1) is running. If (s_1) finishes before (s_2), the (s_1) result gets returned, and the contents of (s_2) get transferred to (s_1). The pipeline terminates when all streams are finished.

void mandelbrot2_sheeprace(double s1_cx, double s1_cy, double s2_cx, double s2_cy, uint32_t m,

iterations_t* s1_r, iterations_t* s2_r) {

double s1_x = 0, s1_y = 0, s1_x_squared = 0, s1_y_squared = 0, s1_mag_squared = 0;

double s2_x = 0, s2_y = 0, s2_x_squared = 0, s2_y_squared = 0, s2_mag_squared = 0;

iterations_t count = m + 2;

while(count != 0) {

if(s2_mag_squared > 4.0) {

// write out stream 1

*s2_r = m - count;

// now only stream 0 left

goto single_point;

}

if(s1_mag_squared > 4.0) {

// write out stream 0

*s1_r = m - count;

// transfer stream 1 to stream 0

s1_r = s2_r;

s1_x = s2_x;

s1_y = s2_y;

s1_cx = s2_cx;

s1_cy = s2_cy;

s1_x_squared = s2_x_squared;

s1_y_squared = s2_y_squared;

s1_mag_squared = s2_mag_squared;

// now only stream 0 left

goto single_point;

}

s1_mag_squared = s1_x_squared + s1_y_squared;

s2_mag_squared = s2_x_squared + s2_y_squared;

count--;

s1_y_squared = s1_y * s1_y;

s2_y_squared = s2_y * s2_y;

s1_x_squared = s1_x * s1_x;

s2_x_squared = s2_x * s2_x;

s1_y = 2 * s1_y * s1_x + s1_cy;

s2_y = 2 * s2_y * s2_x + s2_cy;

s1_x = s1_x_squared - s1_y_squared + s1_cx;

s2_x = s2_x_squared - s2_y_squared + s2_cx;

}

*s1_r = m;

*s2_r = m;

return;

single_point:

while(count != 0 && s1_mag_squared <= 4.0) {

s1_mag_squared = s1_x_squared + s1_y_squared;

count--;

s1_y_squared = s1_y * s1_y;

s1_x_squared = s1_x * s1_x;

s1_y = 2 * s1_y * s1_x + s1_cy;

s1_x = s1_x_squared - s1_y_squared + s1_cx;

}

*s1_r = m - count;

}

The corresponding outer loop is a straightforward extension of mandelbrot_render_simple - points are just processed two at a time:

template void mandelbrot_render_simple2(iterations_t* p, double cx, double cy,

double zoom, uint32_t width, uint32_t height, uint32_t iterations) {

double xs = cx-0.5 / zoom;

double ys = cy + 0.5 * height / (zoom * width);

double inc = 1.0 / (zoom * width);

for(uint32_t j = 0; j < height; j++) {

iterations_t* py=p + j * width;

double y=ys - inc * j;

for(uint32_t i=0; i < width; i += 2) {

F(xs + inc * i, y, xs + inc * (i + 1), y, iterations,

&py[i], &py[i + 1]);

}

}

}

This can be expanded to work with (n) streams (s_1 cdots s_n). Check the github repository for examples with 3 or 4 streams.

Here is a diagram, showing a pipeline working with 4 streams. Note that stream (s_4) transfers to stream (s_2) then (s_1) as they finish.

Results with pipeline of width 2

| #streams | Test A | Test B | Test C | Test D | |

|---|---|---|---|---|---|

| time (seconds) | 2 | 433.7 | 30.6 | 18.2 | 1562.7 |

| total iterations | 2 | 273729279660 | 19298214380 | 11543330380 | 1000000000000 |

| time (seconds) | 3* | 393.7 | 28.0 | 16.7 | 1424.2 |

| total iterations | 3* | 273773335620 | 19336345300 | 11565894240 | 1020000000000 |

| time (seconds) | 4 | 393.2 | 27.9 | 16.7 | 1424.4 |

| total iterations | 4 | 273729279660 | 19298214380 | 11543330380 | 1000000000000 |

* - There are edge effects where the number of streams does not evenly divide the row width, resulting in slightly overflowing the rows in this case (I made the array p a little larger so this would not result in undefined behavior). This would be relatively easy to deal with, but the approach taken in the next section won’t have this issue.

This is a substantial speed improvement - the speed increase is now ~2.5x for a pipeline with 3 or 4 streams, but there are diminishing returns for increasing the number of streams. Possible reasons for this:

-

As more streams are added, the calculations are interleaved, the time between dependent calculations gets to be greater than or equal to the latency, and which point adding streams contributes nothing.

-

More streams requires more active variables. Ideally the inner loop variables should all occur in CPU registers - if there are more variables needed at any given moment than there are registers, the compiler will spill these values to memory, which is slower. The x86_64 family has 16 AVX registers, which are the ones used for floating point (AVX-512 increases that number to 32). PowerPC, ARM and RISC-V have 32 of each register type, so could possibly handle a larger number of streams.

-

The way that this code is set up requires an extra loop for each possible number of active streams (except zero, in which case the function returns), so if there are (n) streams, there are (n) loops. This results in the code size being (O(n^2)). Increased code size might put pressure on the execution and branch caches on the CPU - although the code would have to get rather large for this to happen on modern x86-64 CPUs.

-

The function doesn’t return until all the streams are done, so if one stream takes much longer than the others, the other streams won’t be processing (the black area in the figure above), which means that resources are sitting idle.

Interleaving, take two

The next step is to use SIMD to set up more streams, but the above approach won’t scale to this - the cases in the code would get huge.

The problem can be restructured be inverting the order of the loops and putting what was the inner loop (mandelbrot_point) on the outside, and turning what was the outer loop (mandelbrot_render_simple) into a state engine to get the next point to process:

First the state engine, which simply iterates through the points (there is a wrinkle with the tail which will be described below, but can be assumed to be zero and ignored for now):

class MandelbrotStateEngineSimple {

public:

MandelbrotStateEngineSimple(iterations_t* pp, int pwidth, int pheight,

double pxs, double pys, double pinc, int ptail) {

p = pp;

width = pwidth;

height = pheight;

inc = pinc;

xs = pxs;

ys = pys;

w = 0;

h = 0;

tail = ptail;

};

// get_next point sets px, py, x, y and returns true if there

// is a next point, returns false otherwise

bool get_next_point(double& px, double& py, int&x, int& y) {

if(h == height) {

if(tail == 0) {

return false;

}

tail--;

px = py = 0;

// dummy point

x = 0;

y = height;

return true;

}

x = w;

y = h;

px = xs + w * inc;

py = ys - h * inc;

w++;

if(w >= width) {

w = 0;

h++;

};

return true;

}

private:

iterations_t* p;

int width;

int height;

int w;

int h;

double xs;

double ys;

double inc;

int tail;

};

Here is a simple render loop that uses this state engine - it first calls get_next_point to get the first point, then every time a point is finished, it writes it and gets the next point.

void mandelbrot_render1(iterations_t* p, double cen_x, double cen_y,

double zoom, int width, int height, iterations_t iterations) {

double xs = cen_x - 0.5 / zoom;

double ys = cen_y + 0.5 * height / (zoom * width);

double inc = 1.0 / (zoom * width);

MandelbrotStateEngineSimple mse(p, width, height, xs, ys, inc, 0);

double x = 0, y = 0, x_squared = 0, y_squared = 0;

double mag_squared = 0;

iterations_t count = iterations;

int dx = 0, dy = 0;

double cx = 0, cy = 0;

mse.get_next_point(cx, cy, dx, dy);

while(true) {

if(count == 0 || mag_squared > 4) {

p[dx + dy * width] = iterations - count;

if(!mse.get_next_point(cx, cy, dx, dy)) {

return;

}

// reset the iterators

x = y = 0;

count = iterations;

}

count--;

y = 2 * y * x + cy;

x = x_squared - y_squared + cx;

x_squared = x * x;

y_squared = y * y;

mag_squared = x_squared + y_squared;

}

}

The extension of this to multiple streams is easy - each stream is primed with a call to get_next_point, and each time a point in a stream is finished, it is written out and replaced by the next point, which other streams keep going.

void mandelbrot_render2_sheeprace(iterations_t* p, double cen_x, double cen_y,

double zoom, int width, int height, iterations_t iterations) {

double xs = cen_x - 0.5 / zoom;

double ys = cen_y + 0.5 * height / (zoom * width);

double inc = 1.0 / (zoom * width);

MandelbrotStateEngineSimple mse(p, width, height, xs, ys, inc, 1);

double s1_x = 0, s1_y = 0, s1_x_squared = 0, s1_y_squared = 0, s1_mag_squared = 0;

double s2_x = 0, s2_y = 0, s2_x_squared = 0, s2_y_squared = 0, s2_mag_squared = 0;

iterations_t s1_count = iterations + 2;

iterations_t s2_count = iterations + 2;

int s1_dx = 0, s1_dy = 0;

int s2_dx = 0, s2_dy = 0;

double s1_cx = 0, s1_cy = 0;

double s2_cx = 0, s2_cy = 0;

mse.get_next_point(s1_cx, s1_cy, s1_dx, s1_dy);

mse.get_next_point(s2_cx, s2_cy, s2_dx, s2_dy);

while(true) {

if(s1_count == 0 || s1_mag_squared > 4) {

p[s1_dx + s1_dy * width] = iterations - s1_count;

if(!mse.get_next_point(s1_cx, s1_cy, s1_dx, s1_dy)) {

return;

}

s1_x = s1_y = s1_x_squared = s1_y_squared = s1_mag_squared = 0;

s1_count = iterations + 2;

}

if(s2_count == 0 || s2_mag_squared > 4) {

p[s2_dx + s2_dy * width] = iterations - s2_count;

if(!mse.get_next_point(s2_cx, s2_cy, s2_dx, s2_dy)) {

return;

}

s2_x = s2_y = s2_x_squared = s2_y_squared = s2_mag_squared = 0;

s2_count = iterations + 2;

}

s1_mag_squared = s1_x_squared + s1_y_squared;

s2_mag_squared = s2_x_squared + s2_y_squared;

s1_count--;

s2_count--;

s1_y_squared = s1_y * s1_y;

s2_y_squared = s2_y * s2_y;

s1_x_squared = s1_x * s1_x;

s2_x_squared = s2_x * s2_x;

s1_y = 2 * s1_y * s1_x + s1_cy;

s2_y = 2 * s2_y * s2_x + s2_cy;

s1_x = s1_x_squared - s1_y_squared + s1_cx;

s2_x = s2_x_squared - s2_y_squared + s2_cx;

}

}

This is what the pipeline looks like:

Note that the order of entry of points to the pipeline may not be the same as the order of exit. For instance, a enters the pipeline before b, but b finishes before a.

There needs to be some care taken when there are no more points to process - if the function exits immediately, there may be other points left in the pipeline that don’t get processed. The solution to this is to flush the pipeline at the end by processing some extra points. First the array p is extended by one to be of size width*height+1, so that the last index width*height corresponding to (0,height) can be used to dump the flush values. If if there are (n) streams, then (n+1) points that take the maximum number of iterations ((c_x=0), (c_y=0) works for this ) is sufficient to flush the pipeline. The tail parameter is used to insert these pipeline flushing points.

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| render1 | 934.5 | 66.1 | 39.6 | 3406.9 |

| render1_sheeprace | 855.5 | 60.6 | 36.4 | 3124.9 |

| render2 | 467.9 | 33.3 | 20.1 | 1706.1 |

| render2_sheeprace | 428.5 | 30.4 | 18.3 | 1567.9 |

| render3 | 494.6 | 35.1 | 21.0 | 1807.4 |

| render3_sheeprace | 520.2 | 39.9 | 22.1 | 1899.6 |

| render4 | 527.4 | 37.4 | 22.4 | 1923.0 |

| render4_sheeprace | 560.8 | 40.1 | 24.1 | 2044.3 |

One surprising thing from these results is that the sweet spot seems to be 2 streams, whereas the previous pipeline had a sweet spot of 3 streams. Looking at the assembler, get_next_point is getting inlined, which adds to the register pressure, which leads to more register spills with 3 streams. I speculate that the slowdown is enough to make 3 streams less competitive than two.

Vectorizing

Now there is enough of a framework to use all the parts of the AVX vector, not just the one lane that the previous code has been using. AVX vectors are 256 bits wide, which allows SIMD on 4 doubles at the same time. I’m going to borrow from ARM terminology and call each of these 4 components a lane.

There are cases which the compiler can auto-vectorize code, but I have not explored whether it is possible in this case, so I use the Intel Intrinsics here.

The test for whether a stream has finished needs some effort to make fast - it would be expensive to test each component of the vector one at a time, so I want one test for each vector. One of my favorite intrinsics is _mm256_movemask_pd which uses the vmovmskpd instruction. _mm256_movemask_pd takes the top bit of each 64 bit component of a vector and packs them into the low 4 bits of an integer. The goal is to get the expression (count!=0 && mag_squared=<4.0) to set the high bit to 1 if it succeeds, 0 otherwise.

Firstly, I modified the count loop a little by reducing the value by one, so instead of checking count!=0 the check is changed to count>=0, which will set the high bit when it is time to exit. The _mm256_cmp_pd intrinsic will take care of the mag_squared=<4.0. Then or-ing these together will make the test ready for _mm256_movemask_pd.

Once the end of a stream is detected, the result needs to be written and get_next_point invoked. Both x86-64 AVX2 and ARM NEON support extracting a value from one lane of a vector, but neither of them support the lane chosen to be a variable, so my solution is to store all the vectors to memory, do the setup for the next point from there, then load the memory back into the registers. This is expensive, but for deep zooms will happen infrequently compared to the case where a normal iteration happens.

ARM notes: ARM NEON only has 128 bit vectors so there are only two lanes per vector, the intrinsics documentation is here, and there is no direct vmovmskpd instruction on ARM, but the equivalent can be performed with a few instructions. Apart from that, the ARM NEON code will have pretty similar steps.

void render_avx_sheeprace2(iterations_t* p, double cen_x, double cen_y,

double zoom, int width, int height, iterations_t iterations) {

double xs = cen_x - 0.5 / zoom;

double ys = cen_y + 0.5 * height / (zoom * width);

double inc = 1.0 / (zoom * width);

MandelbrotStateEngineSimple mse(p, width, height, xs, ys, inc, 7);

iterations--;

__m256d s1_x = _mm256_setzero_pd();

__m256d s1_y = _mm256_setzero_pd();

__m256d s1_x_squared = _mm256_setzero_pd();

__m256d s1_y_squared = _mm256_setzero_pd();

__m256d s1_mag_squared = _mm256_setzero_pd();

__m256i s1_count = _mm256_set1_epi64x(iterations + 2);

__m256d s2_x = _mm256_setzero_pd();

__m256d s2_y = _mm256_setzero_pd();

__m256d s2_x_squared = _mm256_setzero_pd();

__m256d s2_y_squared = _mm256_setzero_pd();

__m256d s2_mag_squared = _mm256_setzero_pd();

__m256i s2_count = _mm256_set1_epi64x(iterations + 2);

__m256i one_int64 = _mm256_set1_epi64x(1);

__m256d four = _mm256_set1_pd(4.0);

int s1_dx[4] = {}, s1_dy[4] = {};

int s2_dx[4] = {}, s2_dy[4] = {};

double cx_mem[4] __attribute__((__aligned__(32)));

double cy_mem[4] __attribute__((__aligned__(32)));

mse.get_next_point(cx_mem[0], cy_mem[0], s1_dx[0], s1_dy[0]);

mse.get_next_point(cx_mem[1], cy_mem[1], s1_dx[1], s1_dy[1]);

mse.get_next_point(cx_mem[2], cy_mem[2], s1_dx[2], s1_dy[2]);

mse.get_next_point(cx_mem[3], cy_mem[3], s1_dx[3], s1_dy[3]);

__m256d s1_cx = *(__m256d*)cx_mem;

__m256d s1_cy = *(__m256d*)cy_mem;

mse.get_next_point(cx_mem[0], cy_mem[0], s2_dx[0], s2_dy[0]);

mse.get_next_point(cx_mem[1], cy_mem[1], s2_dx[1], s2_dy[1]);

mse.get_next_point(cx_mem[2], cy_mem[2], s2_dx[2], s2_dy[2]);

mse.get_next_point(cx_mem[3], cy_mem[3], s2_dx[3], s2_dy[3]);

__m256d s2_cx = *(__m256d*)cx_mem;

__m256d s2_cy = *(__m256d*)cy_mem;

while(true) {

__m256d cmp2;

cmp2 = _mm256_or_pd((__m256d)s1_count, _mm256_cmp_pd(s1_mag_squared, four, _CMP_GT_OS));

if(!_mm256_testz_pd(cmp2, cmp2)) {

int mask = _mm256_movemask_pd(cmp2);

_mm256_store_pd(cx_mem, s1_cx);

_mm256_store_pd(cy_mem, s1_cy);

double s1_x_mem[4] __attribute__((__aligned__(32)));

double s1_y_mem[4] __attribute__((__aligned__(32)));

uint64_t s1_count_mem[4] __attribute__((__aligned__(32)));

double s1_x_squared_mem[4] __attribute__((__aligned__(32)));

double s1_y_squared_mem[4] __attribute__((__aligned__(32)));

_mm256_store_pd(s1_x_mem, s1_x);

_mm256_store_pd(s1_y_mem, s1_y);

_mm256_store_si256((__m256i*)s1_count_mem, s1_count);

_mm256_store_pd(s1_x_squared_mem, s1_x_squared);

_mm256_store_pd(s1_y_squared_mem, s1_y_squared);

while(mask != 0) {

int b = __builtin_ctz(mask);

p[s1_dx[b] + s1_dy[b] * width] = iterations - s1_count_mem[b];

if(!mse.get_next_point(cx_mem[b], cy_mem[b], s1_dx[b], s1_dy[b])) {

return;

}

s1_count_mem[b] = iterations + 2;

s1_x_mem[b] = s1_y_mem[b] = s1_x_squared_mem[b] = s1_y_squared_mem[b] = 0;

mask = mask&~(1U<| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| render1_avx | 215.6 | 15.6 | 9.5 | 786.7 |

| render1_avx_sheeprace | 207.9 | 15.1 | 9.2 | 750.0 |

| render2_avx | 126.3 | 9.3 | 5.8 | 458.3 |

| render2_avx_sheeprace | 119.7 | 8.8 | 5.4 | 436.8 |

| render3_avx | 131.6 | 9.7 | 6.0 | 479.2 |

| render3_avx_sheeprace | 131.2 | 9.8 | 6.0 | 480.6 |

render2_avx_sheeprace is the clear winner here, which is not a great surprise given the earlier results.

Conclusion

render2_avx_sheeprace with test D is running ~2.3 billion iterations/second on a single core. On a 2.9 GHz processor, that’s an average of 10.13 clock cycles/iteration for each of 8 streams.

Comparing this with the naive implementation right at the start:

| Test A | Test B | Test C | Test D | |

|---|---|---|---|---|

| naive | 973.4 | 68.7 | 41.2 | 3550.7 |

| render2_avx_sheeprace | 119.7 | 8.8 | 5.4 | 436.8 |

| speed multiplier | 8.1 | 7.8 | 7.6 | 8.1 |

So using a looking carefully at the problem, knowing about the machine architecture and using variety of optimization techniques, I have managed to get a speed improvement of about ~8x.

Here is a rough breakdown of of how much each technique contributes to the best result:

| multiplier | |

|---|---|

| -ffast-math | 1.04 |

| Sheep race | 1.09 |

| Interleaving (2) | 2.0 |

| Vectorization | 3.6 |

Apart from vectorization and interleaving using the same framework with pipelining and a state engine, these are largely independent of each other.

I’m sure that this is not the final improvement that could be made in this area - I welcome any other suggestions, and please feel free to contact me.

There will be a part 2 to this - in that, I will discuss alternatives to the MandelbrotStateEngineSimple state engine that can prune the number of points to calculate, and make some observations about multithreading. This will result in another substantial speed improvement on top of what I have already presented (getting to ~100x in some cases) using these techniques.

Any comments, corrections, observations, or optimizations that I missed? Please feel free to contact me