22 Aug 2023

Conformant OpenGL® ES 3.1 drivers are now available for M1- and M2-family GPUs. That means the drivers are compatible with any OpenGL ES 3.1 application.

Interested? Just install Asahi Linux!

For existing Asahi Linux users, upgrade your system with `dnf upgrade` (Fedora) or `pacman -Syu` (Arch) for the latest drivers.

Our reverse-engineered, free and open source graphics drivers are the world’s only conformant OpenGL ES 3.1 implementation for M1- and M2-family graphics hardware. That means our driver passed tens of thousands of tests to demonstrate correctness and is now recognized by the industry.

To become conformant, an “implementation” must pass the official conformance test suite, designed to verify every feature in the specification. The test results are submitted to Khronos, the standards body. After a 30-day review period, if no issues are found, the implementation becomes conformant. The Khronos website lists all conformant implementations, including our drivers for the M1, M1 Pro/Max/Ultra, M2, and M2 Pro/Max.

Today’s milestone isn’t just about OpenGL ES. We’re releasing the first conformant implementation of any graphics standard for the M1. And we don’t plan to stop here 😉

Unlike ours, the manufacturer’s M1 drivers are unfortunately not

conformant for any standard graphics API, whether Vulkan or

OpenGL or OpenGL ES. That means that there is no guarantee that

applications using the standards will work on your M1/M2 (if you’re not

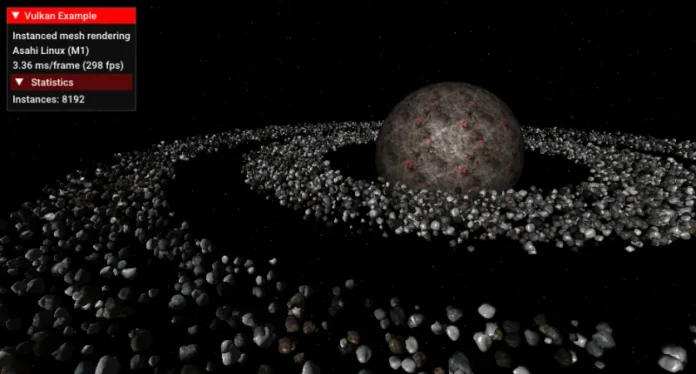

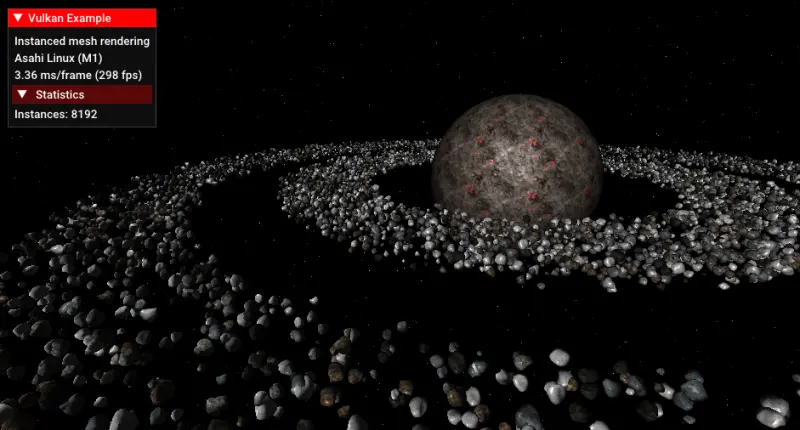

running Linux). This isn’t just a theoretical issue. Consider Vulkan.

The third-party MoltenVK layers a

subset of Vulkan on top of the proprietary drivers. However, those

drivers lack key functionality, breaking valid Vulkan applications. That

hinders developers and users alike, if they haven’t yet switched their

M1/M2 computers to Linux.

Why did we pursue standards conformance when the manufacturer did not? Above all, our commitment to quality. We want our users to know that they can depend on our Linux drivers. We want standard software to run without M1-specific hacks or porting. We want to set the right example for the ecosystem: the way forward is implementing open standards, conformant to the specifications, without compromises for “portability”. We are not satisfied with proprietary drivers, proprietary APIs, and refusal to implement standards. The rest of the industry knows that progress comes from cross-vendor collaboration. We know it, too. Achieving conformance is a win for our community, for open source, and for open graphics.

Of course, Asahi Lina and I are two individuals with minimal funding. It’s a little awkward that we beat the big corporation…

It’s not too late though. They should follow our lead!

OpenGL ES 3.1 updates the experimental OpenGL ES 3.0 and OpenGL 3.1 drivers we shipped in June. Notably, ES 3.1 adds compute shaders, typically used to accelerate general computations within graphics applications. For example, a 3D game could run its physics simulations in a compute shader. The simulation results can then be used for rendering, eliminating stalls that would otherwise be required to synchronize the GPU with a CPU physics simulation. That lets the game run faster.

Let’s zoom in on one new feature: atomics on images. Older versions of OpenGL ES allowed an application to read an image in order to display it on screen. ES 3.1 allows the application to write to the image, typically from a compute shader. This new feature enables flexible image processing algorithms, which previously needed to fit into the fixed-function 3D pipeline. However, GPUs are massively parallel, running thousands of threads at the same time. If two threads write to the same location, there is a conflict: depending on which thread runs first, the result will be different. We have a race condition.

“Atomic” access to memory provides a solution to race conditions. With atomics, special hardware in the memory subsystem guarantees consistent, well-defined results for select operations, regardless of the order of the threads. Modern graphics hardware supports various atomic operations, like addition, serving as building blocks for complex parallel algorithms.
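To make the race concrete, here is a toy Python model (our own illustration, not GPU code) of two threads incrementing the same memory cell. The interleaving is fixed by hand so the lost update is reproducible. `atomic_fetch_add` is a hypothetical stand-in for a hardware atomic add, which performs the read, the modification and the write as one indivisible step.

```python
def non_atomic_increment_interleaved(initial: int) -> int:
    """Two 'threads' each do read-modify-write; both read before either writes."""
    memory = {"cell": initial}
    a = memory["cell"]        # thread A reads
    b = memory["cell"]        # thread B reads the same old value
    memory["cell"] = a + 1    # thread A writes back
    memory["cell"] = b + 1    # thread B overwrites A's result
    return memory["cell"]


def atomic_fetch_add(memory: dict, key: str, value: int) -> int:
    """Model of an atomic add: read, modify and write happen as one
    indivisible step (trivially so here, since the model is single-threaded).
    Returns the old value, like typical hardware atomics."""
    old = memory[key]
    memory[key] = old + value
    return old


def atomic_increment_interleaved(initial: int) -> int:
    """The same two increments, but each one is a single atomic operation."""
    memory = {"cell": initial}
    atomic_fetch_add(memory, "cell", 1)  # thread A
    atomic_fetch_add(memory, "cell", 1)  # thread B
    return memory["cell"]


print(non_atomic_increment_interleaved(0))  # 1: one increment was lost
print(atomic_increment_interleaved(0))      # 2: both increments land
```

On real hardware the indivisibility is a guarantee that holds no matter how the threads are scheduled; the model only shows why that guarantee matters.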

Can we put these two features together to write to an image atomically?

Yes. A ubiquitous OpenGL ES extension, required for ES 3.2, adds atomics operating on pixels in an image. For example, a compute shader could atomically increment the value at pixel (10, 20).

Other GPUs have dedicated instructions to perform atomics on images, making the driver implementation straightforward. For us, the story is more complicated. The M1 lacks hardware instructions for image atomics, even though it has non-image atomics and non-atomic images. We need to reframe the problem.

The idea is simple: to perform an atomic on a pixel, we instead calculate the address of the pixel in memory and perform a regular atomic on that address. Since the hardware supports regular atomics, our task is “just” calculating the pixel’s address.
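A minimal Python sketch of this reframing, assuming a word-addressed flat memory and 4-byte pixels. `FlatMemory`, `image_atomic_add` and the `pixel_address` callback are hypothetical names; a Python lock plays the role of the hardware's regular atomic, and the demo's address function assumes a linear layout purely so the example runs.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


class FlatMemory:
    """Toy memory made of 32-bit words. The lock models the hardware
    guarantee that a regular atomic on one address is indivisible."""

    def __init__(self, size_bytes: int) -> None:
        self.words = [0] * (size_bytes // 4)
        self._lock = threading.Lock()

    def atomic_add(self, address: int, value: int) -> int:
        """Atomically add `value` to the word at byte `address`; return the old value."""
        with self._lock:
            index = address // 4
            old = self.words[index]
            self.words[index] = old + value
            return old


def image_atomic_add(memory: FlatMemory,
                     pixel_address: Callable[[int, int], int],
                     x: int, y: int, value: int) -> int:
    """Emulate an image atomic: compute the pixel's address,
    then issue an ordinary atomic on that address."""
    return memory.atomic_add(pixel_address(x, y), value)


WIDTH, HEIGHT, BYTES_PER_PIXEL = 32, 32, 4


def linear_address(x: int, y: int) -> int:
    # Placeholder layout for the demo only; real GPU images are usually not linear.
    return (y * WIDTH + x) * BYTES_PER_PIXEL


mem = FlatMemory(WIDTH * HEIGHT * BYTES_PER_PIXEL)
with ThreadPoolExecutor(max_workers=8) as pool:
    for _ in range(1000):
        pool.submit(image_atomic_add, mem, linear_address, 10, 20, 1)

print(mem.words[20 * WIDTH + 10])  # 1000: every increment of pixel (10, 20) counted
```

The hard part is hidden inside `pixel_address`: the atomic itself is unchanged, so everything hinges on computing the right address.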

If the image were laid out linearly in memory, this would be straightforward: multiply the Y-coordinate by the number of bytes per row (“stride”), multiply the X-coordinate by the number of bytes per pixel, and add. That gives the pixel’s offset in bytes relative to the first pixel of the image. To get the final address, we add that offset to the address of the first pixel.
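The linear case, written out as a small Python function (the names are our own, for illustration). The stride is passed separately rather than derived from the width, assuming rows may be padded.

```python
def linear_pixel_address(base: int, x: int, y: int,
                         stride: int, bytes_per_pixel: int) -> int:
    """Byte address of pixel (x, y) in a linearly laid-out image."""
    offset = y * stride + x * bytes_per_pixel  # bytes from the first pixel
    return base + offset


# Example: 4-byte pixels, 1024-byte rows, image starting at 0x10000.
print(hex(linear_pixel_address(0x10000, x=10, y=20,
                               stride=1024, bytes_per_pixel=4)))  # 0x15028
```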

Alas, images are rarely linear in memory. To improve cache efficiency, modern graphics hardware interleaves the X- and Y-coordinates. Instead of one row after the next, pixels in memory follow a spiral-like curve.

We need to amend our previous equation to interleave the coordinates. We could use many instructions to mask one bit at a time, shifting to construct the interleaved result, but that’s inefficient. We can do better.
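For reference, the one-bit-at-a-time approach looks like this in Python. We assume X lands in the even bit positions and Y in the odd ones; the opposite convention works the same way with the roles swapped. Each input bit costs a shift, a mask, another shift and an OR, which adds up quickly in shader code.

```python
def interleave_naive(x: int, y: int, bits: int = 16) -> int:
    """Interleave bit by bit: bit i of x -> bit 2*i, bit i of y -> bit 2*i + 1."""
    result = 0
    for i in range(bits):
        result |= ((x >> i) & 1) << (2 * i)
        result |= ((y >> i) & 1) << (2 * i + 1)
    return result


# Offsets of a 2x2 block, listed row by row: memory order traces a "Z".
print([interleave_naive(x, y) for y in range(2) for x in range(2)])  # [0, 1, 2, 3]
print(interleave_naive(3, 5))  # 39
```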

There is a well-known “bit twiddling” algorithm to interleave bits. Rather than shuffle one bit at a time, the algorithm shuffles groups of bits, parallelizing the problem. Implementing this algorithm in shader code improves performance.
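One common form of that algorithm, the “binary magic numbers” interleave popularized by Sean Anderson's Bit Twiddling Hacks page, is sketched below in Python. It is a standard CPU formulation, not necessarily the exact code our compiler emits. Each round doubles the spacing between groups of bits: bytes, then nibbles, then pairs, then single bits.

```python
def spread_bits(v: int) -> int:
    """Move bit i of a 16-bit value to bit 2*i, shuffling whole groups per round."""
    v &= 0xFFFF
    v = (v | (v << 8)) & 0x00FF00FF  # separate the two bytes
    v = (v | (v << 4)) & 0x0F0F0F0F  # separate nibbles
    v = (v | (v << 2)) & 0x33333333  # separate bit pairs
    v = (v | (v << 1)) & 0x55555555  # separate single bits
    return v


def interleave_twiddled(x: int, y: int) -> int:
    """X in even bits, Y in odd bits: four shift/OR/mask rounds per coordinate."""
    return spread_bits(x) | (spread_bits(y) << 1)


print(interleave_twiddled(3, 5))  # 39
```

Four rounds per coordinate replace a 16-iteration loop, independent of how many bits are set.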

In practice, only the lower 7 bits (or fewer) of each coordinate are interleaved. That lets us use 32-bit instructions to “vectorize” the interleave, by putting the X- and Y-coordinates in the low and high 16 bits of a 32-bit register. Those 32-bit instructions let us interleave X and Y at the same time, halving the instruction count.
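Here is a Python model of that packing trick (our own emulation; `interleave_packed` is a hypothetical name). X goes in the low 16 bits and Y in the high 16 bits of one 32-bit word, so each shift/OR/mask round spreads both coordinates at once. Because the inputs are at most 8 bits, the byte-separating round of the general algorithm can be skipped, and no bits cross from the low half into the high half. The last line adds the spread Y, shifted left by one, to the spread X, which is exactly the kind of step a combined shift-and-add instruction handles.

```python
def interleave_packed(x: int, y: int) -> int:
    """Interleave 8-bit x and y using 32-bit operations on one packed word.

    Every intermediate fits in 32 bits for 8-bit inputs, so Python's
    unbounded integers behave like a 32-bit register here.
    """
    if not (0 <= x < 256 and 0 <= y < 256):
        raise ValueError("x and y must fit in 8 bits")
    r = (y << 16) | x                 # x in low half, y in high half
    r = (r | (r << 4)) & 0x0F0F0F0F   # nibbles, both halves at once
    r = (r | (r << 2)) & 0x33333333   # bit pairs
    r = (r | (r << 1)) & 0x55555555   # single bits
    low, high = r & 0xFFFF, r >> 16   # spread x, spread y
    return low + (high << 1)          # shift-and-add: y lands in the odd bits


print(interleave_packed(3, 5))  # 39
```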

Plus, we can exploit the GPU’s combined shift-and-add instruction. Putting the tricks together, we interleave in 10 instructions of M1 GPU assembly:

```
# Inputs x, y in r0l, r0h.
# Output in r1.

# Round 1: r1 = (r0 | r0 << 4) & 0x0f0f0f0f (both halves at once)
add r2, #0, r0, lsl 4
or r1, r0, r2
and r1, r1, #0xf0f0f0f

# Round 2: spread bit pairs
add r2, #0, r1, lsl 2
or r1, r1, r2
and r1, r1, #0x33333333

# Round 3: spread single bits
add r2, #0, r1, lsl 1
or r1, r1, r2
and r1, r1, #0x55555555

# Combine: x stays in even bits, y shifts into odd bits
add r1, r1l, r1h, lsl 1
```

We could stop here, but what if there’s a dedicated instruction to interleave bits? PowerVR has a “shuffle” instruction `shfl`, and the M1 GPU borrows from PowerVR. Perhaps that instruction was borrowed too. Unfortunately, even if it was, the proprietary compiler won’t use it when compiling our test shaders. That makes it difficult to reverse-engineer the instruction (if it exists) by observing compiled shaders.

It’s time to dust off a powerful reverse-engineering technique from magic kindergarten: guess and check.

Dougall Johnson provided the guess. When considering the instructions we already know about, he took special notice of the “reverse bits” instruction. Since reversing bits is a type of bit shuffle, the interleave instruction should be encoded similarly. The bit reverse instruction has a two-bit field specifying the operation, with value 01. Related instructions to count the number of set bits and find the first set bit have values 10 and 11, respectively. That encompasses all known “complex bit manipulation” instructions.

| Operation field | Instruction |
|---|---|
| 00 | ??? |
| 01 | Reverse bits |
| 10 | Count set bits |
| 11 | Find first set |

There is one value of the two-bit enumeration that is unobserved and unknown: 00. If this interleave instruction exists, it’s probably encoded like the bit reverse but with operation code 00 instead of 01.

There’s a difficulty: the three known instructions have a single input source, but our instruction interleaves two sources. Where does the second source go? We can make a guess based on symmetry. Presumably to simplify the hardware decoder, M1 GPU instructions usually encode their sources in consistent locations across instructions. The three known instructions have a gap exactly where a two-source arithmetic instruction would encode its second source. Probably the second source is there.

Armed with a guess, it’s our turn to check. Rather than handwrite GPU assembly, we can hack our compiler to replace some two-source integer operation (like multiply) with our guessed encoding of “interleave”. Then we write a compute shader using this operation (by “multiplying” numbers) and run it with the newfangled compute support in our driver.

All that’s left is writing a shader that checks that the mystery instruction returns the interleaved result for each possible input. Since the instruction takes two 16-bit sources, there are about 4 billion ($2^{32}$) inputs. With our driver, the M1 GPU manages to check them all in under a second, and the verdict is in: this is our interleave instruction.
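The GPU test itself is not reproduced here, but the shape of an exhaustive check is easy to sketch on the CPU. In this Python sketch, `candidate` stands in for the values the mystery instruction would write back (here a deliberately different, string-based implementation), and `reference_interleave` is the expected result; all names are hypothetical. Pure Python is far too slow for all 4 billion inputs, so the sketch restricts each source to a few bits.

```python
from itertools import product
from typing import Callable, Optional, Tuple


def reference_interleave(x: int, y: int) -> int:
    """Expected result: bit i of x -> bit 2*i, bit i of y -> bit 2*i + 1."""
    out = 0
    for i in range(16):
        out |= ((x >> i) & 1) << (2 * i)
        out |= ((y >> i) & 1) << (2 * i + 1)
    return out


def string_interleave(x: int, y: int) -> int:
    """Independent implementation used as a stand-in for the GPU's output."""
    xb, yb = f"{x:016b}", f"{y:016b}"  # most significant bit first
    return int("".join(yc + xc for xc, yc in zip(xb, yb)), 2)


def find_mismatch(candidate: Callable[[int, int], int],
                  bits: int) -> Optional[Tuple[int, int, int, int]]:
    """Try every (x, y) with `bits`-bit sources; return the first
    (x, y, got, expected) that disagrees, or None if all match."""
    for x, y in product(range(1 << bits), repeat=2):
        got, expected = candidate(x, y), reference_interleave(x, y)
        if got != expected:
            return (x, y, got, expected)
    return None


print(find_mismatch(string_interleave, bits=8))                        # None
print(find_mismatch(lambda x, y: reference_interleave(y, x), bits=8))  # (0, 1, 1, 2)
```

The swapped-operand candidate models one plausible way a guessed encoding could be wrong (sources in the opposite slots), and the checker reports it on the first input where the two sources differ in a set bit.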

As for our clever vectorized assembly to interleave coordinates? We can replace it with one instruction. It’s anticlimactic, but it’s fast and it passes the conformance tests.

And that’s what matters.

Thank you to Khronos and Software in the Public Interest for supporting open drivers.